The sights and insights of examiners in objective structured clinical examinations

Affiliations

1. Clinical Skills Teaching Unit, Prince of Wales Hospital, Sydney, Australia.
2. Office of Medical Education, University of New South Wales, Sydney, Australia. b.shulruf@unsw.edu.au
3. University of New South Wales, Sydney, Australia.
4. Prince of Wales Clinical School, University of New South Wales, Sydney, Australia.
5. Centre for Medical and Health Sciences Education, University of Auckland, Auckland, New Zealand.

- KMID: 2406697

- DOI: http://doi.org/10.3352/jeehp.2017.14.34

Abstract

PURPOSE

The objective structured clinical examination (OSCE) is considered one of the most robust methods of clinical assessment. One of its strengths is its ability to minimise the effects of examiner bias by standardising items and tasks for each candidate. However, OSCE examiners' assessment scores are influenced by several factors that may jeopardise the assumed objectivity of OSCEs. To better understand this phenomenon, this review aims to identify and describe important sources of examiner bias and the factors affecting examiners' assessments.

METHODS

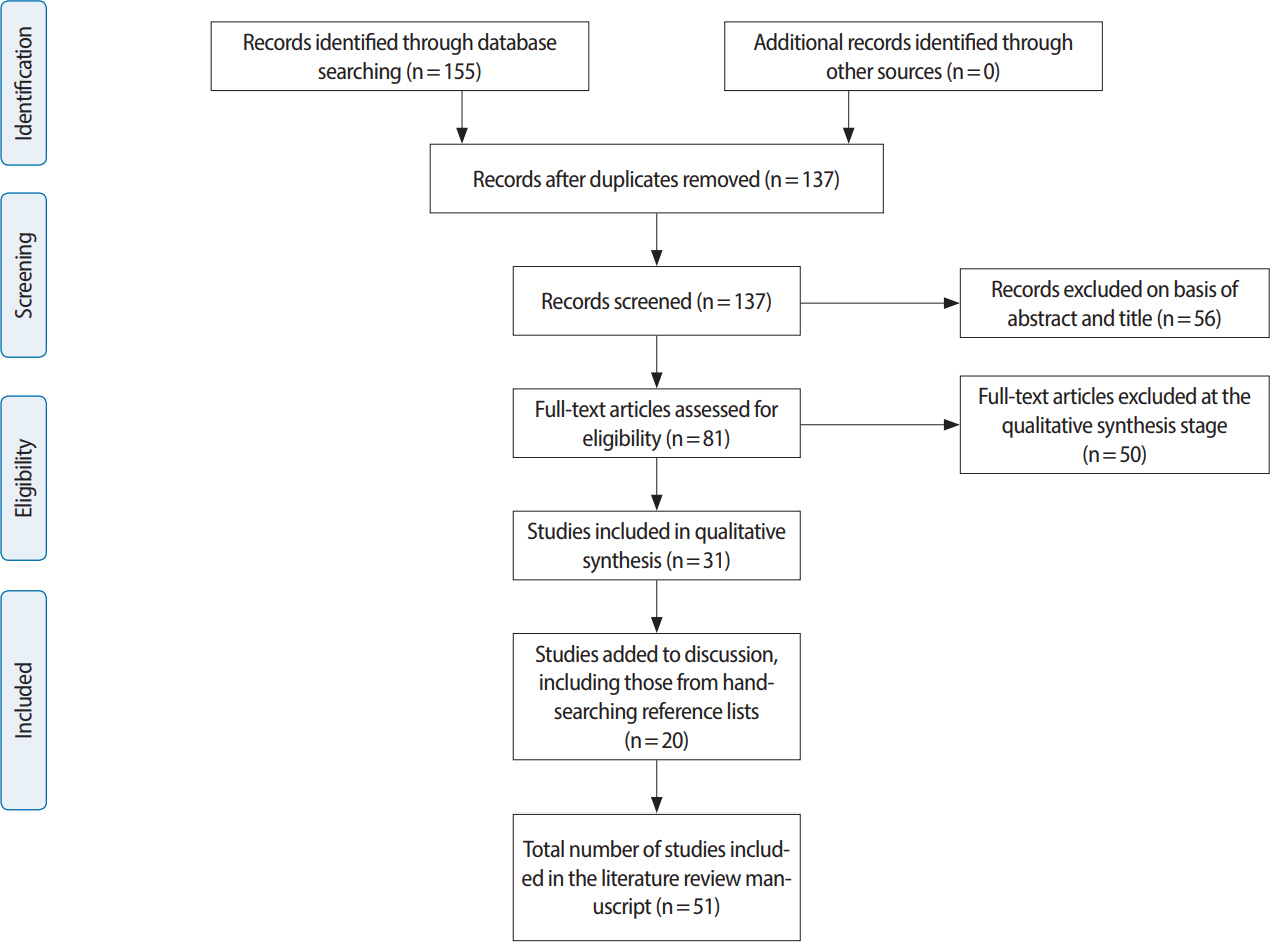

We performed a narrative review of the medical literature using Medline. All articles meeting the selection criteria were reviewed, and salient points were extracted and synthesised into a summary of current knowledge in this area.

RESULTS

OSCE examiners' assessment scores are influenced by factors belonging to four domains: examination context, examinee characteristics, examinee-examiner interactions, and examiner characteristics. These domains comprise several factors, including halo, hawk/dove, and OSCE contrast effects; the examiner's gender and ethnicity; training; lifetime experience in assessing; leadership and familiarity with students; station type; and site effects.

CONCLUSION

Several factors may compromise the presumed objectivity of examiners' assessments, and these factors need to be addressed to ensure that OSCEs remain objective. We suggest directions for future research to better understand and address examiner bias.
