Clin Endosc. 2019 Jul;52(4):328-333. doi:10.5946/ce.2018.172.

Recent Development of Computer Vision Technology to Improve Capsule Endoscopy

Affiliations

1. Digestive Disease Center, Institute for Digestive Research, Department of Internal Medicine, Soonchunhyang University College of Medicine, Seoul, Korea.

2. Intelligent Image Processing Research Center, Korea Electronics Technology Institute (KETI), Seongnam, Korea.

3. Department of Internal Medicine, Dongguk University Ilsan Hospital, Dongguk University College of Medicine, Goyang, Korea. Email: limyj@dumc.or.kr

4. Division of Gastroenterology and Hepatology, Department of Internal Medicine, Institute of Gastrointestinal Medical Instrument Research, Korea University College of Medicine, Seoul, Korea.

- KMID: 2455628

- DOI: http://doi.org/10.5946/ce.2018.172

Abstract

Capsule endoscopy (CE) is a preferred method for diagnosing small bowel diseases. However, because of their mechanical limitations, capsule endoscopes capture a limited number of images. Post-procedural processing with computational methods can enhance image quality. Additional information, such as depth, can be obtained with recently developed computer vision techniques. Using this technology, it is possible to measure the size of lesions and track the trajectory of the capsule endoscope without requiring additional equipment. Moreover, computational analysis of CE images can help detect lesions more accurately and in less time. Newly introduced deep learning-based methods have shown better results than traditional computerized approaches. A large-scale standard dataset should be prepared to develop optimal algorithms for improving the diagnostic yield of CE. Close collaboration between information technology and medical professionals is needed.
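As a rough illustration of how recovered depth can support lesion size measurement, the sketch below back-projects two lesion endpoints into 3D with an ideal pinhole camera model and measures the distance between them. It is a minimal sketch under stated assumptions, not the method of any study reviewed here: the helper names `back_project` and `lesion_length_mm`, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the per-point depth values are hypothetical, and real capsule optics would first need calibration and lens-distortion correction.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def back_project(u: float, v: float, depth_mm: float,
                 fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Map pixel (u, v) with depth Z (mm) to 3D camera coordinates.

    Assumes an ideal, undistorted pinhole camera (hypothetical intrinsics):
    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy.
    """
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return (x, y, depth_mm)


def lesion_length_mm(p1, p2, intrinsics) -> float:
    """Euclidean distance (mm) between two lesion endpoints.

    p1, p2: (u, v, depth_mm) for each endpoint, with depth assumed to come
    from a depth map estimated by a computer vision method.
    intrinsics: (fx, fy, cx, cy) in pixels.
    """
    a = back_project(*p1, *intrinsics)
    b = back_project(*p2, *intrinsics)
    return math.dist(a, b)


if __name__ == "__main__":
    intrinsics = (200.0, 200.0, 160.0, 160.0)  # hypothetical values
    p1 = (150, 160, 20.0)  # left edge of lesion, 20 mm from the lens
    p2 = (190, 160, 20.0)  # right edge, same depth
    print(lesion_length_mm(p1, p2, intrinsics))  # 4.0
```

With these hypothetical values, a lesion spanning 40 pixels at a depth of 20 mm measures 4 mm. When both endpoints lie at the same depth, the estimate scales linearly with that depth, so errors in the depth map carry directly into the measured size.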

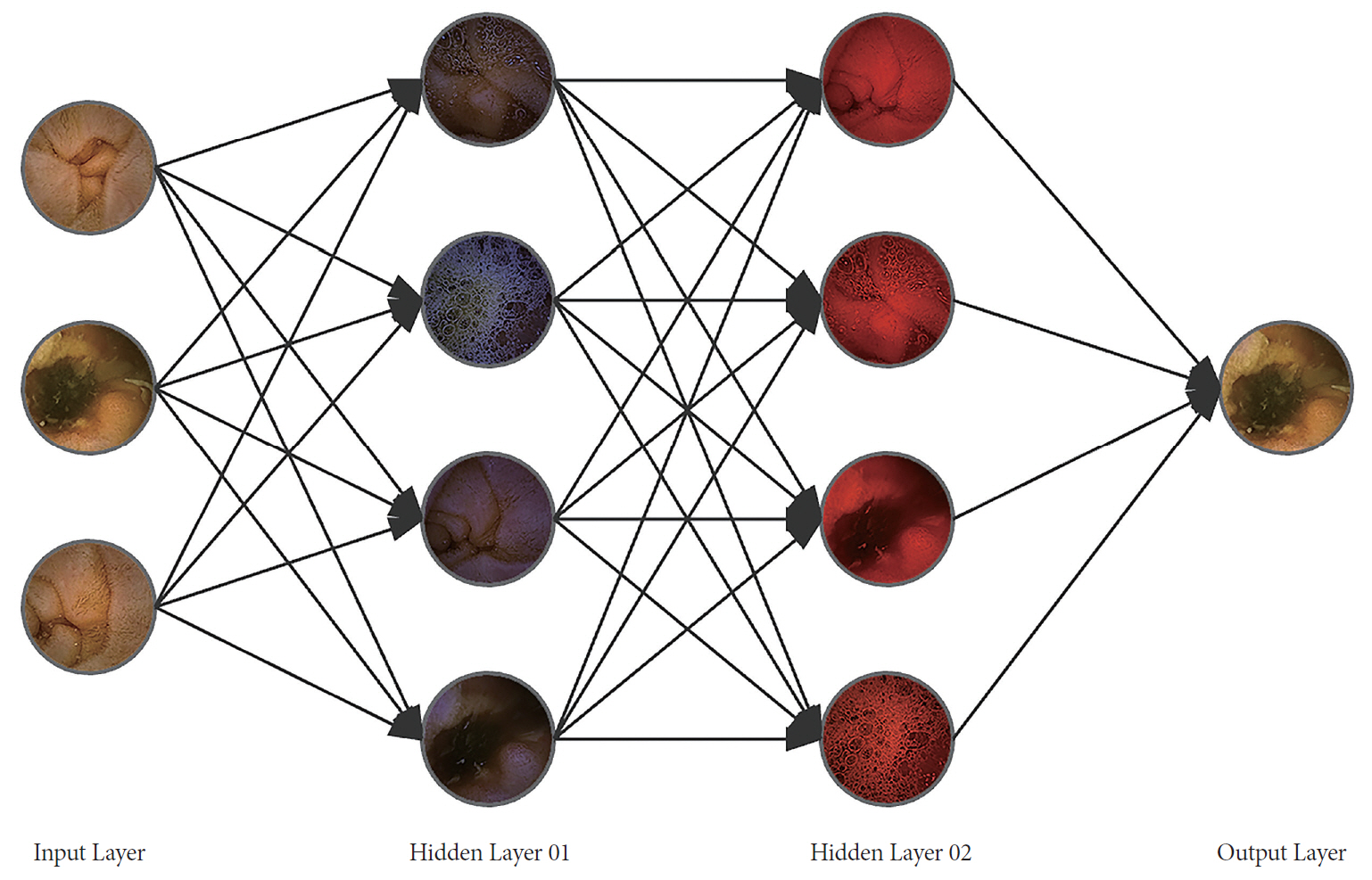

Similar articles

- Improved Capsule Endoscopy Using New Computer Vision Technologies

- As how artificial intelligence is revolutionizing endoscopy

- The Future of Capsule Endoscopy: The Role of Artificial Intelligence and Other Technical Advancements

- Application of Artificial Intelligence in Capsule Endoscopy: Where Are We Now?

- Colon Capsule Endoscopy for Inflammatory Bowel Disease