Healthc Inform Res. 2011 Mar;17(1):24-28. doi:10.4258/hir.2011.17.1.24

Evaluation of Co-occurring Terms in Clinical Documents Using Latent Semantic Indexing

- Affiliations

- (1) Interdisciplinary Program of Bioengineering, College of Engineering, Seoul National University, Seoul, Korea.

- (2) Seoul National University Bundang Hospital, Seongnam, Korea.

- (3) Department of Biomedical Engineering, College of Medicine, Seoul National University, Seoul, Korea (jinchoi@snu.ac.kr).

- KMID: 2166592

- DOI: http://doi.org/10.4258/hir.2011.17.1.24

Abstract

OBJECTIVES

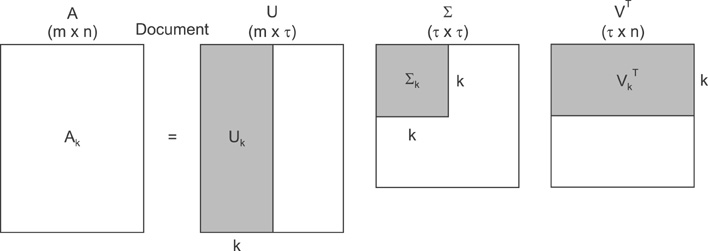

Measurements of similarity between documents are typically affected by the sparseness of the underlying term-document matrix. Latent semantic indexing (LSI) may improve the results of this type of analysis.
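To make the sparseness problem concrete, here is a minimal sketch, assuming whitespace tokenization, raw term-frequency vectors, and cosine similarity. The three notes are hypothetical toy examples, not data from this study, and "sparsity" here simply means the fraction of zero cells in the term-document matrix. Notes that describe the same finding with an abbreviation, a full term, or a typographical error share few or no terms, so their raw similarity is low or zero.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two sparse term-frequency vectors stored as Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical toy notes (not study data): the same finding written three ways.
notes = ["acute mi with chest pain",                     # abbreviation "mi"
         "acute myocardial infarction with chest pain",  # full term
         "r/o myocardial infraction"]                    # abbreviation "r/o" plus a typo
vectors = [Counter(n.split()) for n in notes]            # naive whitespace tokenization

# Fraction of zero cells in the (terms x documents) count matrix.
vocabulary = set().union(*vectors)
nonzero = sum(len(v) for v in vectors)
sparsity = 1 - nonzero / (len(vocabulary) * len(vectors))

print(f"sparsity = {sparsity:.2f}")               # sparsity = 0.48
print(round(cosine(vectors[0], vectors[2]), 3))   # 0.0: no shared terms at all
print(round(cosine(vectors[1], vectors[2]), 3))   # 0.236: only "myocardial" matches
```

Even in this tiny example almost half of the matrix is empty, and on a realistic vocabulary the proportion of zero cells is far higher, which is what drives exact-match similarity toward zero.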

METHODS

In this study, LSI was used to reduce the dimensionality of the term vector space of clinical documents and newspaper editorials.
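The dimensionality reduction can be sketched with the standard LSI formulation (Deerwester et al.): a rank-k truncated singular value decomposition A ≈ U_k Σ_k V_k^T of the term-document matrix A, with documents compared in the k-dimensional latent space. This is an illustrative sketch only. The toy corpus, the use of raw counts without term weighting, and the choice k = 2 are assumptions, not the settings used in the study. It uses NumPy's `numpy.linalg.svd`.

```python
import numpy as np

def lsi_doc_vectors(A, k):
    """Project documents (columns of term-document matrix A) into a rank-k latent space.

    Uses the truncated SVD A ~ U_k S_k V_k^T; row j of the result is document j's
    latent coordinates, i.e. column j of S_k V_k^T.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)  # singular values sorted descending
    return (np.diag(s[:k]) @ Vt[:k, :]).T

def cosine(u, v):
    """Cosine similarity between two dense vectors (0.0 if either is all zeros)."""
    nu, nv = np.linalg.norm(u), np.linalg.norm(v)
    return 0.0 if nu == 0 or nv == 0 else float(u @ v / (nu * nv))

# Hypothetical toy corpus (not study data). "mi" and "infarction" never appear in the
# same document, but both co-occur with "troponin".
terms = ["mi", "infarction", "troponin", "fracture", "cast", "xray"]
docs = [["mi", "troponin"], ["infarction", "troponin"], ["mi"], ["infarction"],
        ["fracture", "cast"], ["fracture", "xray"]]
A = np.array([[d.count(t) for d in docs] for t in terms], dtype=float)  # terms x docs

raw = A.T                    # documents in the original sparse term space
lsi = lsi_doc_vectors(A, 2)  # documents in a 2-dimensional latent space

print(round(cosine(raw[2], raw[3]), 3))  # 0.0: "mi" note vs "infarction" note, raw terms
print(round(cosine(lsi[2], lsi[3]), 3))  # 1.0: the same pair after LSI
print(abs(cosine(lsi[2], lsi[4])))       # close to 0: cardiac note vs orthopedic note
```

The two single-term notes become similar only because their terms co-occur with a shared term elsewhere in the corpus, which is the kind of co-occurrence effect the abstract describes. A larger k keeps more detail but merges fewer related expressions; the value used in the study is not stated here.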

RESULTS

After applying LSI, document similarities were revealed more clearly in clinical documents than in editorials. Clinical documents, which are characterized by co-occurring medical terms, varied expressions for the same concepts, abbreviations, and typographical errors, showed a greater improvement in the correlation between co-occurring terms and document similarities.

CONCLUSIONS

Our results show that LSI can be used effectively to measure similarities between clinical documents. In addition, the correlation between term co-occurrence and document similarity observed in this study is an important positive feature of LSI.

Similar articles

- A Study of Effective Unified Medical Language System Concept Indexing in Radiology Reports

- A Korean MeSH Keyword Suggestion System for Medical Paper Indexing

- Clinical Data Element Ontology for Unified Indexing and Retrieval of Data Elements across Multiple Metadata Registries

- Promotion to MEDLINE, indexing with Medical Subject Headings, and open data policy for the Journal of Educational Evaluation for Health Professions

- The Development of Clinical Document Standards for Semantic Interoperability in China