Ann Rehabil Med.

2024 Aug;48(4):271-280. 10.5535/arm.230029.

Extensive Multilabel Classification of Brain MRI Scans for Infarcts Using the Swin UNETR Architecture in Deep Learning Applications

- Affiliations

- 1Department of Physical Medicine and Rehabilitation, Seoul Daehyo Rehabilitation Hospital, Yangju, Korea

- 2Department of Emergency Medicine, Pohang SeMyeong Christianity Hospital, Pohang, Korea

- KMID: 2558733

- DOI: http://doi.org/10.5535/arm.230029

Abstract

Objective

To classify infarct location and type as precisely as possible by leveraging the Swin UNEt TRansformers (Swin UNETR) architecture.

Methods

The research employed a two-phase training approach. In the first phase, the Swin UNETR model was trained on the Ischemic Stroke Lesion Segmentation Challenge (ISLES) 2022 dataset, which includes cases of acute and subacute infarcts. The second phase involved training with data from 309 patients to classify infarcts into 110 categories. These categories are defined over 22 specific brain regions: each region is divided into right and left sides, and each side includes four types of infarcts (acute, acute lacunar, subacute, subacute lacunar). The Swin UNETR architecture, which combines elements of the transformer and U-Net designs through a hierarchical transformer computed with shifted windows, was central to the study.
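As an illustration of how a region, side, and infarct-type scheme can be turned into a multilabel target, the following minimal sketch builds a multi-hot vector in plain Python. It is not the authors' code. The abstract does not enumerate which combinations make up the 110 categories (a full cross of 22 × 2 × 4 would give 176), so the sketch uses a full cross product over three hypothetical regions; the region names and the helpers `build_label_index` and `encode` are assumptions made for this example.

```python
from itertools import product

SIDES = ("right", "left")
INFARCT_TYPES = ("acute", "acute lacunar", "subacute", "subacute lacunar")

def build_label_index(regions, sides=SIDES, types=INFARCT_TYPES):
    """Map each (region, side, type) triple to a column index."""
    return {triple: i for i, triple in enumerate(product(regions, sides, types))}

def encode(findings, label_index):
    """Return a multi-hot list: 1 where a finding is present, 0 elsewhere."""
    target = [0] * len(label_index)
    for finding in findings:
        target[label_index[finding]] = 1
    return target

# Hypothetical toy label space: 3 regions x 2 sides x 4 types = 24 labels.
regions = ("thalamus", "pons", "frontal lobe")
label_index = build_label_index(regions)

# One patient may carry several findings at once, hence "multilabel".
findings = [
    ("thalamus", "left", "acute lacunar"),
    ("pons", "right", "subacute"),
]
target = encode(findings, label_index)
```

Because several entries of `target` can be 1 at the same time, a classifier for this kind of target would typically use one independent output per label rather than a single softmax over all labels.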

Results

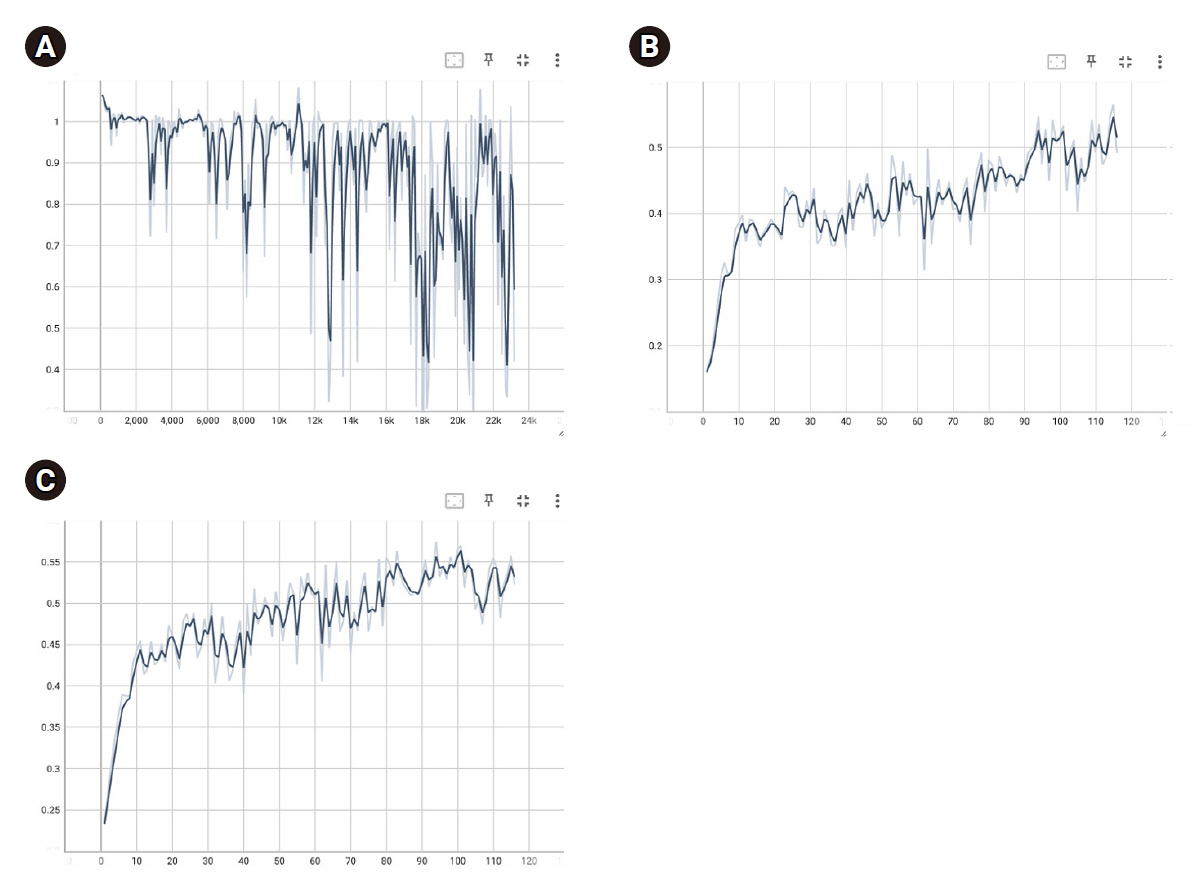

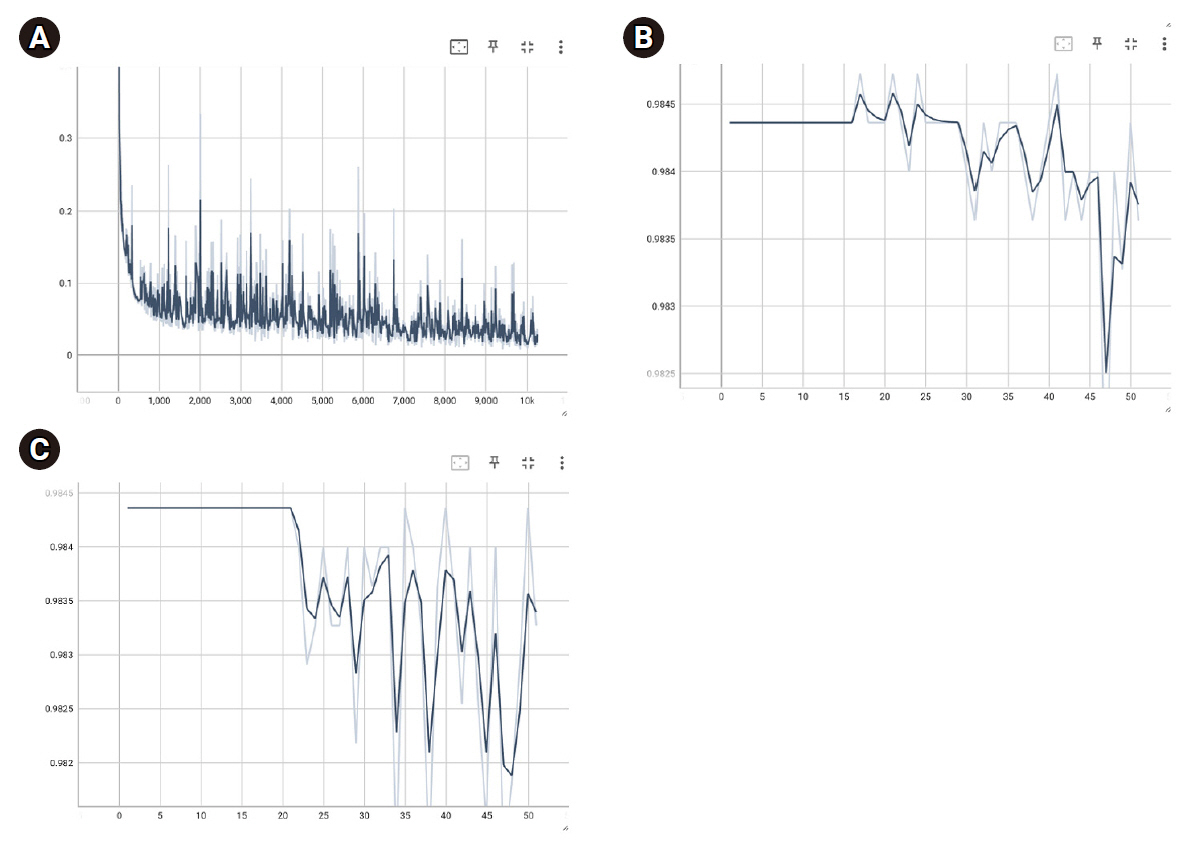

During Swin UNETR training with the ISLES 2022 dataset, batch loss decreased to 0.8885±0.1897, with training and validation Dice scores reaching 0.4224±0.0710 and 0.4827±0.0607, respectively. The best-performing model weights achieved a validation Dice score of 0.5747. In the patient data model, batch loss decreased to 0.0565±0.0427, with final training and validation accuracies of 0.9842±0.0005 and 0.9837±0.0010, respectively.
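To make the two kinds of metric reported above concrete, here is a minimal sketch of a Dice score for binary masks and of two common ways to compute accuracy for multilabel predictions. The abstract does not state which accuracy definition was used, so both variants are shown as assumptions; the function names and toy inputs are hypothetical and are not taken from the study.

```python
def dice_score(pred, target, eps=1e-8):
    """Dice = 2|P and T| / (|P| + |T|) for two flat binary masks.

    eps avoids division by zero; two empty masks score 1.0.
    """
    intersection = sum(p * t for p, t in zip(pred, target))
    return (2.0 * intersection + eps) / (sum(pred) + sum(target) + eps)

def elementwise_accuracy(preds, targets):
    """Fraction of individual label entries that are predicted correctly."""
    total = sum(len(t) for t in targets)
    correct = sum(
        p == t
        for pred_row, target_row in zip(preds, targets)
        for p, t in zip(pred_row, target_row)
    )
    return correct / total

def exact_match_accuracy(preds, targets):
    """Fraction of samples whose whole label vector is predicted correctly."""
    matches = sum(pred_row == target_row for pred_row, target_row in zip(preds, targets))
    return matches / len(targets)

# Toy segmentation masks (flattened voxels): 2 overlapping voxels, 3 per mask.
pred_mask = [0, 1, 1, 1, 0, 0]
true_mask = [0, 0, 1, 1, 1, 0]
dice = dice_score(pred_mask, true_mask)

# Toy multilabel predictions for 2 samples with 4 labels each.
preds = [[1, 0, 0, 0], [0, 0, 1, 0]]
targets = [[1, 0, 0, 0], [0, 1, 1, 0]]
acc_elem = elementwise_accuracy(preds, targets)
acc_exact = exact_match_accuracy(preds, targets)
```

In this toy case the two accuracy definitions differ (7 of 8 entries correct versus 1 of 2 samples fully correct), which is why the definition matters when comparing accuracies across studies with many, mostly negative, labels.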

Conclusion

The accuracy achieved in this study surpasses that of similar studies, but overfitting remains a concern, highlighting the need for future work to improve generalizability. Such detailed classifications could significantly aid physicians in diagnosing infarcts in clinical settings.
