J Educ Eval Health Prof.

2016;13:6. 10.3352/jeehp.2016.13.6.

Calibrating the Medical Council of Canada's Qualifying Examination Part I using an integrated item response theory framework: a comparison of models and designs

- Affiliations


- ¹Research & Development, Medical Council of Canada, Ottawa, Ontario, Canada. adechamplain@mcc.ca

- ²Educational Research Methodology Department, School of Education, University of North Carolina at Greensboro, Greensboro, North Carolina, USA.

- KMID: 2413755

- DOI: http://doi.org/10.3352/jeehp.2016.13.6

Abstract

PURPOSE

The aim of this research was to compare item response theory (IRT)-based methods of calibrating the multiple-choice question (MCQ) and clinical decision-making (CDM) components of the Medical Council of Canada's Qualifying Examination Part I (MCCQEI).

METHODS

Our data consisted of test results from 8,213 first-time applicants to the MCCQEI in the spring and fall administrations of 2010 and 2011. The data set contained several thousand MCQ items and several hundred CDM cases. Four dichotomous calibrations were run using BILOG-MG 3.0. All 3 mixed-item-format calibrations (dichotomous MCQ responses and polytomous CDM case scores) were conducted using PARSCALE 4.
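As a rough orientation to the models being compared, the following Python sketch evaluates the item response functions that such calibrations estimate: the 2-PL logistic model for dichotomously scored MCQs (constraining the slope `a` to a common value across items gives the 1-PL case) and Samejima's graded response model for polytomously scored items such as CDM case scores. It is a minimal sketch assuming the common logistic parameterization with scaling constant D = 1.7; it does not reproduce how BILOG-MG or PARSCALE estimate parameters, and the function names and parameter values are hypothetical.

```python
import math


def prob_2pl(theta: float, a: float, b: float, D: float = 1.7) -> float:
    """Probability of a correct response under the 2-PL logistic model.

    Constraining `a` to one common value for all items gives a 1-PL model.
    D = 1.7 is the conventional constant that makes the logistic curve
    approximate the normal ogive (an assumption of this sketch).
    """
    return 1.0 / (1.0 + math.exp(-D * a * (theta - b)))


def grm_category_probs(theta: float, a: float, thresholds: list[float],
                       D: float = 1.7) -> list[float]:
    """Category probabilities under Samejima's graded response model.

    `thresholds` must be increasing; m thresholds define m + 1 score
    categories (0..m). Each boundary curve P*(X >= k) is a 2-PL curve,
    and each category probability is the difference of adjacent boundaries.
    """
    # Boundary probabilities, padded with P*(X >= 0) = 1 and P*(X >= m+1) = 0.
    boundaries = [1.0] + [prob_2pl(theta, a, b_k, D) for b_k in thresholds] + [0.0]
    return [boundaries[k] - boundaries[k + 1] for k in range(len(thresholds) + 1)]


if __name__ == "__main__":
    # Hypothetical item parameters, for illustration only.
    print(round(prob_2pl(0.0, 1.2, 0.0), 3))  # 0.5 when theta equals b
    probs = grm_category_probs(0.5, 1.0, [-1.0, 0.0, 1.0])
    print([round(p, 3) for p in probs], round(sum(probs), 6))
```

Because a dichotomous 2-PL item is simply a graded response item with a single threshold, this parameterization is one way to see how a mixed-format calibration can place MCQs and CDM cases on a common ability scale.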

RESULTS

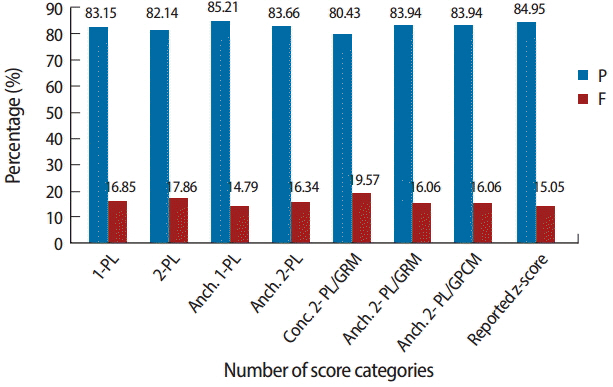

The 2-PL model yielded identical numbers of items with chi-square fit statistics significant at a Type I error rate of 0.01 (83/3,499, or 0.02). In all 3 polytomous models, whether the MCQs were anchored or calibrated concurrently with the CDM cases, the results suggested very poor fit. The IRT ability estimates from all dichotomous calibration designs correlated very highly with one another. IRT-based pass-fail rates were extremely similar, not only across calibration designs and methods but also relative to the decisions actually reported to candidates. The largest difference in pass rates was 4.78 percentage points, between the mixed-format concurrent 2-PL graded response model (pass rate = 80.43%) and the dichotomous anchored 1-PL calibrations (pass rate = 85.21%).
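The proportions in the Results can be checked with simple arithmetic. This sketch uses only figures reported in the abstract (the variable names are illustrative): 83 of 3,499 items is about 0.024, reported as 0.02, and the gap between the two pass rates is an absolute difference in percentage points, which corresponds to a relative difference of roughly 5.6% of the 1-PL pass rate.

```python
# Figures taken from the Results paragraph of the abstract.
misfit_items, total_items = 83, 3_499
pass_rate_grm_concurrent = 80.43  # mixed-format concurrent 2-PL graded response model (%)
pass_rate_1pl_anchored = 85.21    # dichotomous anchored 1-PL calibration (%)

# Share of items whose chi-square fit statistic was significant at 0.01.
misfit_proportion = misfit_items / total_items

# Absolute gap between pass rates, in percentage points.
pass_rate_gap = pass_rate_1pl_anchored - pass_rate_grm_concurrent

# The same gap expressed relative to the higher (1-PL) pass rate, in percent.
relative_gap_pct = 100 * pass_rate_gap / pass_rate_1pl_anchored

print(f"Proportion of misfitting items: {misfit_proportion:.3f}")
print(f"Largest pass-rate gap: {pass_rate_gap:.2f} percentage points")
print(f"Relative to the 1-PL pass rate: {relative_gap_pct:.1f}%")
```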

CONCLUSION

The dichotomous calibrations fit the item response matrix better than the more complex polytomous calibrations. Simpler calibration designs with dichotomized items should therefore be implemented.


References

1. Zimowski M, Muraki E, Mislevy R, Bock D. BILOG-MG 3: multiple-group IRT analysis and test maintenance for binary items. Chicago (IL): Scientific Software International, Inc; 2003.

2. Muraki E. PARSCALE 4: IRT based test scoring and item analysis for graded items and rating scales. Chicago (IL): Scientific Software International, Inc; 2003.

3. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.


- Similar articles


- Best fit model of exploratory and confirmatory factor analysis to the 2010 Medical Council of Canada's Qualifying Examination Part I clinical decision making cases

- Application of Computerized Adaptive Testing in Medical Education

- The Medical Council of Canada Qualifying Examination Part II

- Comparison of item analysis results of Korean Medical Licensing Examination according to classical test theory and item response theory

- The assessment of clinical competence : The experience of the Medical Council of Canada