Observer Agreement Using the ACR Breast Imaging Reporting and Data System (BI-RADS)-Ultrasound, First Edition (2003)

- Affiliations

- 1 Department of Radiology, Our Lady of Mercy Hospital, College of Medicine, The Catholic University of Korea, Korea. heerad@catholic.ac.kr

- 2 Department of Radiology, Kangnam St. Mary's Hospital, College of Medicine, The Catholic University of Korea, Korea.

- 3 Department of Preventive Medicine, College of Medicine, The Catholic University of Korea, Korea.

- 4 Department of Radiology, St. Vincent's Hospital, College of Medicine, The Catholic University of Korea, Korea.

- 5 Department of Radiology, St. Paul's Hospital, College of Medicine, The Catholic University of Korea, Korea.

- 6 Department of Radiology, St. Mary's Hospital, College of Medicine, The Catholic University of Korea, Korea.

- 7 Department of Radiology, Holy Family Hospital, College of Medicine, The Catholic University of Korea, Korea.

- KMID: 1734288

- DOI: http://doi.org/10.3348/kjr.2007.8.5.397

Abstract

OBJECTIVE

This study aims to evaluate the degree of inter- and intraobserver agreement when characterizing breast abnormalities using the Breast Imaging Reporting and Data System (BI-RADS)-ultrasound (US) lexicon, as defined by the American College of Radiology (ACR).

MATERIALS AND METHODS

Two hundred ninety-three female patients with 314 lesions underwent US-guided biopsies at one facility during a two-year period. Static sonographic images of each breast lesion were acquired and reviewed by four radiologists with expertise in breast imaging. Each radiologist independently evaluated all cases and described the mass according to BI-RADS-US. To assess intraobserver variability, one of the four radiologists reassessed all of the cases one month after the initial evaluation. Inter- and intraobserver variabilities were determined using Cohen's kappa (k) statistics.

RESULTS

Among the descriptors, interobserver agreement was highest for mass orientation (k = 0.61) and lowest, at only a fair level, for mass margin (k = 0.32) and echo pattern (k = 0.36). The other descriptors, namely shape, lesion boundary and posterior features (k = 0.42, k = 0.55 and k = 0.53, respectively), and the final assessment (k = 0.51) demonstrated only moderate levels of agreement. A substantial degree of intraobserver agreement was found when classifying all morphologic features: shape, orientation, margin, lesion boundary, echo pattern and posterior features (k = 0.73, k = 0.68, k = 0.64, k = 0.68, k = 0.65 and k = 0.64, respectively) and when rendering final assessments (k = 0.65).

CONCLUSION

Although BI-RADS-US was created to achieve a consensus among radiologists when describing breast abnormalities, our study shows substantial intraobserver agreement but only moderate interobserver agreement in the mass description and final assessment of breast abnormalities when the lexicon is used. Better agreement will ultimately require specialized education, as well as self-auditing practice tests.
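The agreement levels quoted in the abstract (fair, moderate, substantial) follow the kappa scale of Landis and Koch. As an illustration only, the sketch below computes Cohen's kappa for two raters and maps the value to that scale. The function names and the example ratings are hypothetical and are not taken from the study. The abstract also does not state how the four readers were combined into a single interobserver value, so this two-rater version may differ from the authors' procedure.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who labelled the same cases."""
    if len(ratings_a) != len(ratings_b) or len(ratings_a) == 0:
        raise ValueError("both raters must label the same non-empty set of cases")
    n = len(ratings_a)
    # Observed agreement: fraction of cases with identical labels.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    count_a = Counter(ratings_a)
    count_b = Counter(ratings_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical category for every case.
        return 1.0
    return (observed - expected) / (1.0 - expected)


def landis_koch_label(kappa):
    """Map a kappa value to the Landis and Koch (1977) agreement category."""
    if kappa < 0.0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Hypothetical orientation ratings from two readers for ten lesions.
reader_1 = ["parallel", "parallel", "not parallel", "parallel", "not parallel",
            "parallel", "not parallel", "parallel", "parallel", "not parallel"]
reader_2 = ["parallel", "parallel", "not parallel", "not parallel", "not parallel",
            "parallel", "parallel", "parallel", "parallel", "not parallel"]

kappa = cohen_kappa(reader_1, reader_2)
label = landis_koch_label(kappa)
print(f"kappa = {kappa:.2f} ({label})")  # prints "kappa = 0.58 (moderate)"
```

In this toy example the readers agree on 8 of 10 lesions (observed agreement 0.80), while chance agreement from their marginal frequencies is 0.52, giving k = (0.80 - 0.52) / (1 - 0.52), or about 0.58. Applying the same scale to the reported values puts k = 0.61 for orientation in the substantial band and k = 0.32 for margin in the fair band.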

MeSH Terms

Adenocarcinoma/classification/*diagnosis

Adenocarcinoma, Mucinous/classification/*diagnosis

Adolescent

Adult

Aged

Aged, 80 and over

Biopsy

Breast Neoplasms/classification/*diagnosis

Carcinoma, Ductal, Breast/classification/*diagnosis

Carcinoma, Intraductal, Noninfiltrating/classification/*diagnosis

Female

Follow-Up Studies

Humans

Middle Aged

Observer Variation

Predictive Value of Tests

Radiology

Reproducibility of Results

Sensitivity and Specificity

Societies, Medical

Terminology as Topic

Ultrasonography, Doppler, Color/statistics & numerical data

Ultrasonography, Mammary/*statistics & numerical data

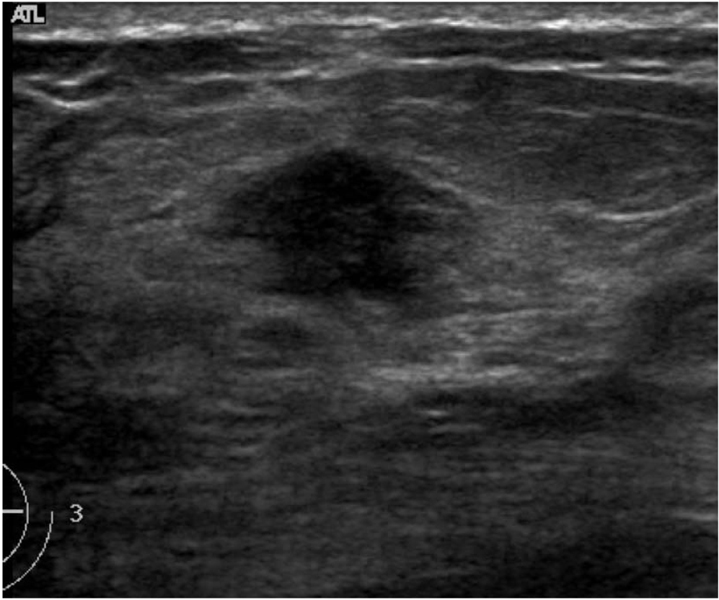

Figure

Cited by 3 articles

Histological Analysis of Benign Breast Imaging Reporting and Data System Categories 4c and 5 Breast Lesions in Imaging Study

Min Jung Kim, Dokyung Kim, WooHee Jung, Ja Seung Koo

Yonsei Med J. 2012;53(6):1203-1210. doi: 10.3349/ymj.2012.53.6.1203.

Screening Ultrasound in Women with Negative Mammography: Outcome Analysis

Ji-Young Hwang, Boo-Kyung Han, Eun Young Ko, Jung Hee Shin, Soo Yeon Hahn, Mee Young Nam

Yonsei Med J. 2015;56(5):1352-1358. doi: 10.3349/ymj.2015.56.5.1352.

Radiology Residents' Comprehension of the Breast Imaging Reporting and Data System: The Ultrasound Lexicon and Final Assessment Category

Sun Hye Jeong, Yun Ho Roh, Jung Hyun Yoon, Eun Hye Lee, Sung Hun Kim, Ji Hyun Youk, You Me Kim, Min Jung Kim

J Korean Soc Radiol. 2017;77(1):19-26. doi: 10.3348/jksr.2017.77.1.19.


