Clin Exp Otorhinolaryngol.

2023 Aug;16(3):217-224. 10.21053/ceo.2023.00206.

Feasibility of Virtual Reality-Based Auditory Localization Training With Binaurally Recorded Auditory Stimuli for Patients With Single-Sided Deafness

- Affiliations


- 1Laboratory of Brain and Cognitive Sciences for Convergence Medicine, Hallym University College of Medicine, Anyang, Korea

- 2Ear and Interaction Center, Doheun Institute for Digital Innovation in Medicine (D.I.D.I.M.), Hallym University Medical Center, Anyang, Korea

- 3Department of Otorhinolaryngology-Head and Neck Surgery, Hallym University College of Medicine, Chuncheon, Korea

- KMID: 2545260

- DOI: http://doi.org/10.21053/ceo.2023.00206

Abstract

Objectives

Training participants to localize sound using virtual reality (VR) technology requires auditory stimuli that contain accurate spatial cues. The generic head-related transfer function underlying the programmed spatial audio in VR does not reflect individual variation in monaural spatial cues, which are critical for auditory spatial perception in patients with single-sided deafness (SSD). Because binaural difference cues are unavailable to these patients, impaired auditory spatial perception is a typical problem in the SSD population and warrants intervention. This study assessed the applicability of binaurally recorded auditory stimuli in VR-based training for sound localization in SSD patients.

Methods

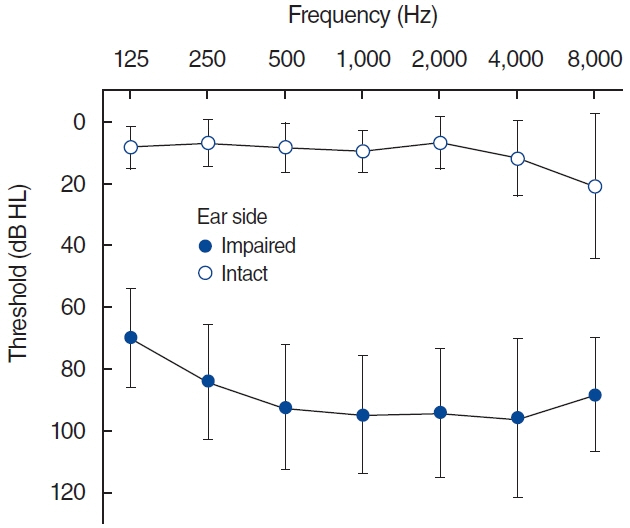

Sixteen subjects with SSD and 38 normal-hearing (NH) controls underwent VR-based training for sound localization and were assessed 3 weeks after completing training. The VR program incorporated prerecorded auditory stimuli, which were created individually for each participant in the SSD group and with an anthropometric model for the NH group.

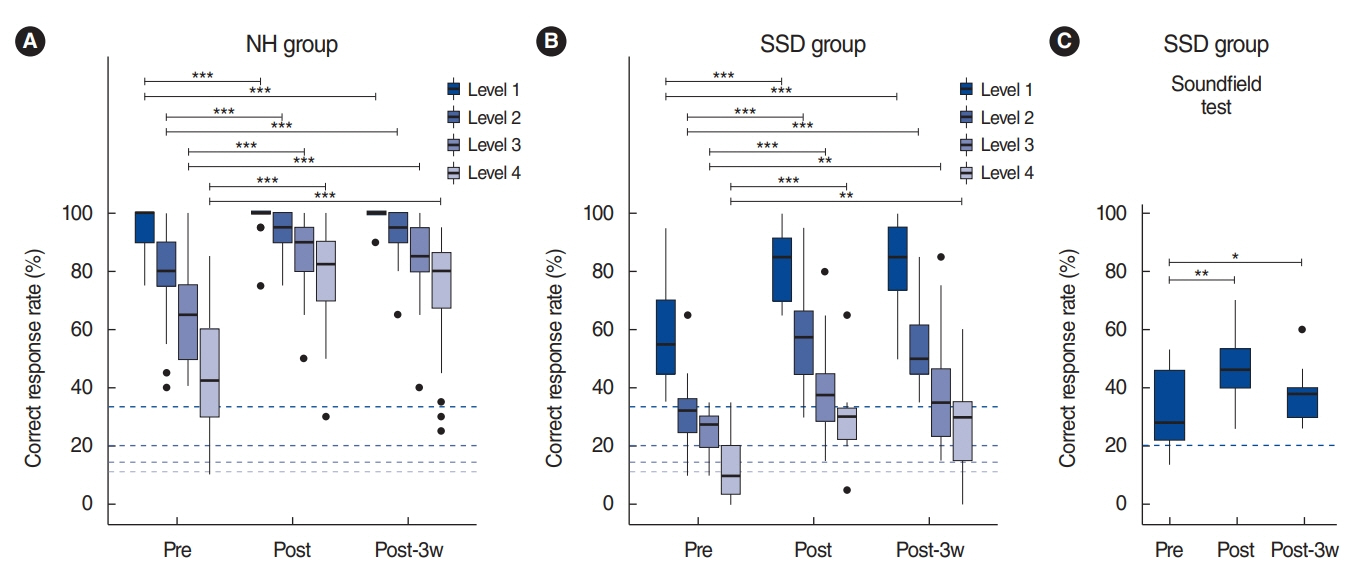

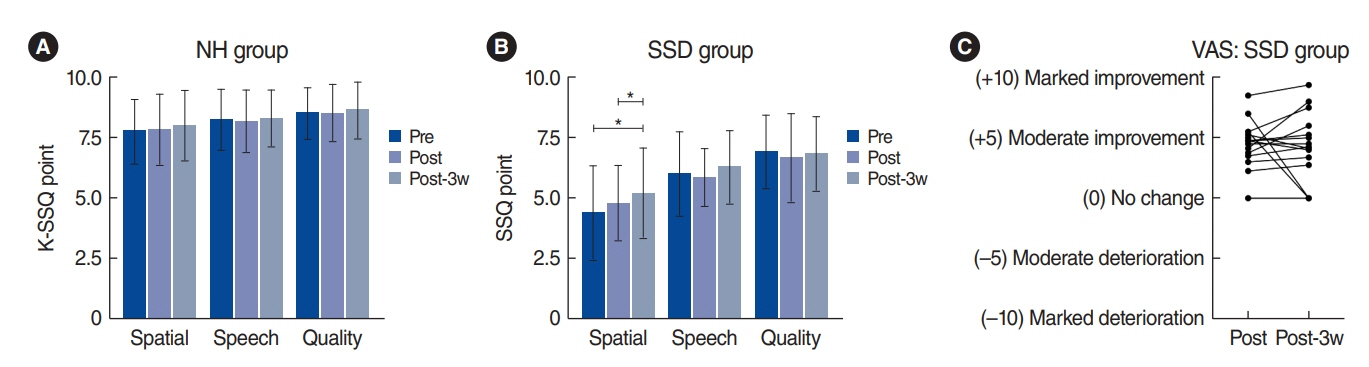

Results

Sound localization performance improved significantly in both groups after training, and the benefits were retained for an additional 3 weeks. Subjective improvements in spatial hearing were confirmed in the SSD group.

Conclusion

VR-based training for sound localization with individually measured, binaurally recorded stimuli was effective and beneficial in individuals with SSD and in NH controls. Furthermore, VR-based training does not require sophisticated instruments or setups. These results suggest that this technique represents a new therapeutic option for impaired sound localization.

