J Korean Med Sci.

2023 Mar;38(12):e82. 10.3346/jkms.2023.38.e82.

Development of Novel Musical Stimuli to Investigate the Perception of Musical Emotions in Individuals With Hearing Loss

- Affiliations

- 1 Laboratory of Brain & Cognitive Sciences for Convergence Medicine, Hallym University College of Medicine, Anyang, Korea

- 2 Ear and Interaction Center, Doheun Institute for Digital Innovation in Medicine (D.I.D.I.M.), Hallym University Medical Center, Anyang, Korea

- 3 Department of Otorhinolaryngology, Hallym University College of Medicine, Chuncheon, Korea

- KMID: 2541035

- DOI: http://doi.org/10.3346/jkms.2023.38.e82

Abstract

Background

Many studies have examined the perception of musical emotion using excerpts from familiar music that expresses emotions strongly, asking listeners to classify the emotion they perceive. However, when familiar music is used to study musical emotions in people with acquired hearing loss, it is ambiguous whether the perceived emotion stems from previous experience with the music or from listening to the current musical stimuli. To overcome this limitation, we developed new musical stimuli to study emotional perception without the effects of episodic memory.

Methods

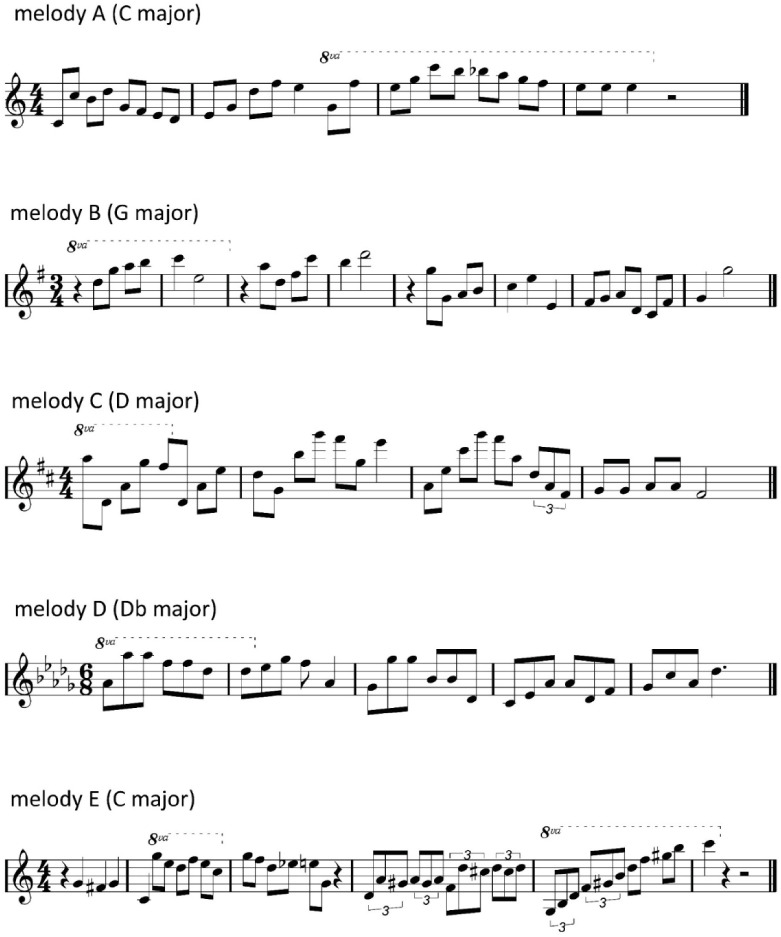

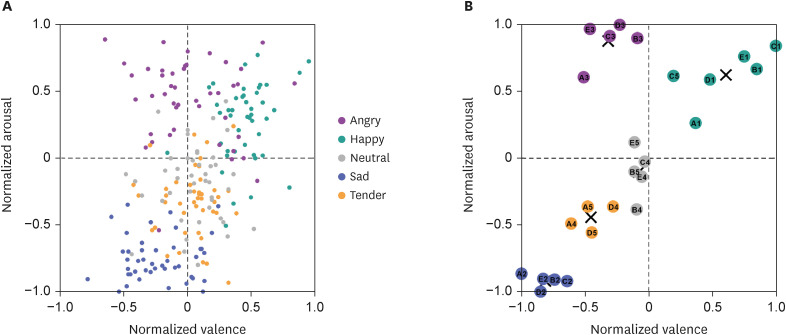

A musician was instructed to compose five melodies with pitches evenly distributed around 1 kHz. The melodies were created to express five emotions: happy, sad, angry, tender, and neutral. Two methods were applied to evaluate whether these melodies expressed the intended emotions. First, we classified the emotions expressed by the melodies using genetic algorithm-based k-nearest neighbors, with musical features selected from a set of 60 features. Second, 44 people with normal hearing participated in an online survey on the emotional perception of music, based on dimensional and discrete approaches, to evaluate the musical stimuli set.
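The first evaluation method, feature selection by a genetic algorithm with a k-nearest neighbors classifier, can be illustrated with a minimal sketch. This is not the authors' implementation: the toy data, the leave-one-out fitness, k = 3, the genetic-algorithm settings, and all function names (`knn_predict`, `loo_accuracy`, `ga_select`, `make_toy_data`) are assumptions made for illustration only.

```python
import random
from collections import Counter

EMOTIONS = ["happy", "sad", "angry", "tender", "neutral"]

def make_toy_data(n_per_class=8, n_noise=6, seed=1):
    """Synthetic stand-in for musical features: 2 informative + n_noise noise columns."""
    rng = random.Random(seed)
    X, y = [], []
    for idx, emotion in enumerate(EMOTIONS):
        for _ in range(n_per_class):
            informative = [rng.gauss(2.0 * idx, 0.3), rng.gauss(-2.0 * idx, 0.3)]
            noise = [rng.gauss(0.0, 2.0) for _ in range(n_noise)]
            X.append(informative + noise)
            y.append(emotion)
    return X, y

def knn_predict(train_X, train_y, x, mask, k=3):
    """Majority vote among the k nearest rows, using only features where mask is True."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b, m in zip(row, x, mask) if m), label)
        for row, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]

def loo_accuracy(X, y, mask, k=3):
    """Leave-one-out accuracy of k-NN restricted to the masked features (assumed fitness)."""
    if not any(mask):
        return 0.0
    hits = 0
    for i in range(len(X)):
        rest_X, rest_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        hits += knn_predict(rest_X, rest_y, X[i], mask, k) == y[i]
    return hits / len(X)

def ga_select(X, y, n_gen=10, pop_size=10, p_mut=0.1, seed=0):
    """Evolve boolean feature masks; returns (best_mask, best_accuracy)."""
    rng = random.Random(seed)
    n_feat = len(X[0])

    def fitness(mask):
        return loo_accuracy(X, y, mask)

    pop = [[rng.random() < 0.5 for _ in range(n_feat)] for _ in range(pop_size)]
    pop[0] = [True] * n_feat  # include the all-features baseline in the first generation
    for _ in range(n_gen):
        ranked = sorted(pop, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]   # truncation selection
        children = [ranked[0]]              # elitism: the best mask always survives
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_feat)  # one-point crossover
            child = a[:cut] + b[cut:]
            children.append([(not g) if rng.random() < p_mut else g for g in child])
        pop = children
    best = max(pop, key=fitness)
    return best, fitness(best)

if __name__ == "__main__":
    X, y = make_toy_data()
    mask, acc = ga_select(X, y)
    print("selected feature indices:", [i for i, m in enumerate(mask) if m])
    print("leave-one-out accuracy:", round(acc, 2))
```

Because the all-features mask is placed in the first generation and the best mask is carried forward unchanged, the selected subset in this sketch can never score below the full feature set under the same fitness measure.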

Results

Twenty-four selected musical features classified the intended emotions with an accuracy of 76%. In the online survey of the normal hearing (NH) group, the intended emotions were selected significantly more often than the others. K-means clustering analysis of the arousal and valence ratings revealed that the melodies corresponded to representative quadrants of interest. Additionally, the applicability of the stimuli was tested in four individuals with high-frequency hearing loss.
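The clustering step can be sketched as follows, assuming ratings expressed as (valence, arousal) pairs centered on zero. The rating scale, the sample points, the quadrant labels, and the function names (`kmeans`, `quadrant`) are illustrative assumptions and are not taken from the study.

```python
import random

def kmeans(points, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm on 2-D points; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize from k distinct data points
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: (p[0] - centroids[c][0]) ** 2
                                        + (p[1] - centroids[c][1]) ** 2)
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append((
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                ))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, labels

def quadrant(valence, arousal):
    """Name the valence-arousal quadrant of a point (zero counts as positive/high)."""
    v = "positive valence" if valence >= 0 else "negative valence"
    a = "high arousal" if arousal >= 0 else "low arousal"
    return f"{v}, {a}"

if __name__ == "__main__":
    # Hypothetical mean ratings for a handful of melodies.
    ratings = [(-1.0, -1.0), (-1.1, -0.9), (1.0, 1.0), (1.1, 0.9)]
    centroids, labels = kmeans(ratings, k=2)
    for c in centroids:
        print(c, "->", quadrant(*c))
```

Reading the quadrant of each cluster centroid is one simple way to check whether rated melodies land where the intended emotion would be expected in a valence-arousal plane.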

Conclusion

When tested in individuals with NH, the musical stimuli were classified with high accuracy as expressing the intended emotions. These results confirm that the set of musical stimuli can be used to study perceived emotion in music and demonstrate the validity of the stimuli independent of innate musical bias, such as that due to episodic memory. Furthermore, because the pitch is controlled for each emotion, the musical stimuli could be helpful for further study of perceived musical emotion in people with hearing loss.
