Yeungnam Univ J Med.

2021 Apr;38(2):118-126. 10.12701/yujm.2020.00423.

A study on evaluator factors affecting physician-patient interaction scores in clinical performance examinations: a single medical school experience

- Affiliations

- 1Department of Medical Education, Konyang University College of Medicine, Daejeon, Korea

- 2Department of Preventive Medicine and Public Health, Yeungnam University College of Medicine, Daegu, Korea

- 3Department of Medical Humanities, Yeungnam University College of Medicine, Daegu, Korea

- KMID: 2515185

- DOI: http://doi.org/10.12701/yujm.2020.00423

Abstract

Background

This study analyzed evaluator factors affecting physician-patient interaction (PPI) scores in the clinical performance examination (CPX). Its purpose was to investigate possible ways to increase the reliability of CPX evaluation.

Methods

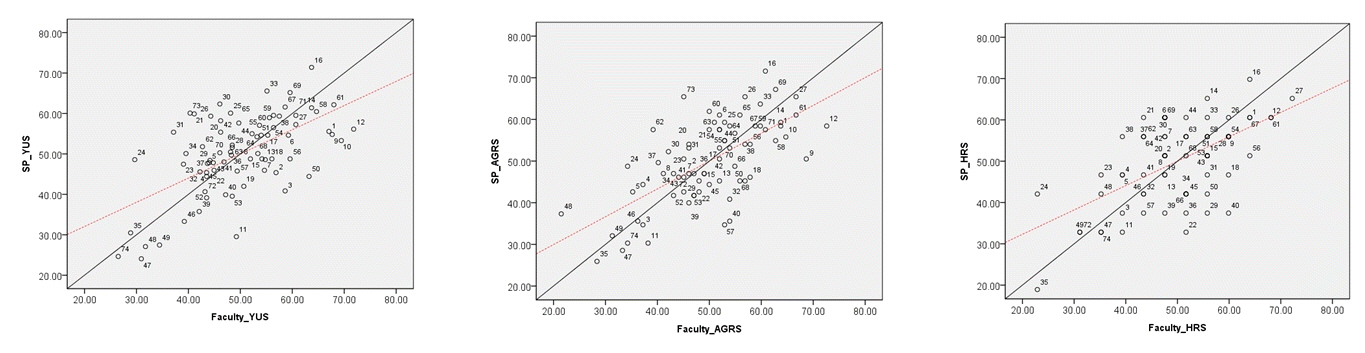

The six-item Yeungnam University Scale (YUS), four-item analytic global rating scale (AGRS), and one-item holistic rating scale (HRS) were used to evaluate student performance in PPI. A total of 72 fourth-year students from Yeungnam University College of Medicine in Korea participated in the evaluation, with 32 faculty and 16 standardized patient (SP) raters. Score differences were then examined by type of scale, type of rater (SP vs. faculty), faculty specialty, evaluation experience, and level of fatigue as evaluation time passed.

Results

There were significant differences between faculty and SP scores on all three scales, and a significant correlation among raters’ scores. Scores that raters gave on items related to their specialty were lower than scores that raters gave on items outside their specialty. On the YUS and AGRS, there were significant differences based on the faculty’s evaluation experience; raters with three to ten previous evaluation experiences gave lower scores than other raters. There were also significant differences among SP raters on all scales. The correlation between the YUS and the AGRS/HRS declined significantly as evaluation time lengthened.

Conclusion

In CPX, PPI score reliability was found to be significantly affected by the evaluator factors as well as the type of scale.

