J Educ Eval Health Prof. 2019;16:10. doi: 10.3352/jeehp.2019.16.10.

Medical students’ thought process while solving problems in 3 different types of clinical assessments in Korea: clinical performance examination, multimedia case-based assessment, and modified essay question

Affiliations

- ¹ Research and Innovation in Learning Lab, College of Education, The University of Georgia, Athens, GA, USA
- ² Department of Internal Medicine, Inje University College of Medicine, Busan, Korea
- ³ Department of Radiology, Inje University College of Medicine, Busan, Korea
- ⁴ Department of Preventive Medicine, Inje University College of Medicine, Busan, Korea

- KMID: 2502155

- DOI: http://doi.org/10.3352/jeehp.2019.16.10

Abstract

Purpose

This study aimed to explore medical students’ cognitive patterns while solving clinical problems in 3 different types of assessments (clinical performance examination [CPX], multimedia case-based assessment [CBA], and modified essay question [MEQ]) and thereby to understand how different types of assessments stimulate different patterns of thinking.

Methods

A total of 6 test-performance cases from 2 fourth-year medical students (3 assessments per student) were used in this cross-case study. Data were collected through one-on-one interviews using a stimulated recall protocol, in which students were shown videos of themselves taking each assessment and asked to elaborate on what they had been thinking. The unit of analysis was the smallest phrase or sentence in the participants’ narratives that represented a meaningful cognitive occurrence. The narrative data were reorganized chronologically and then analyzed according to the hypothetico-deductive reasoning framework for clinical reasoning.

Results

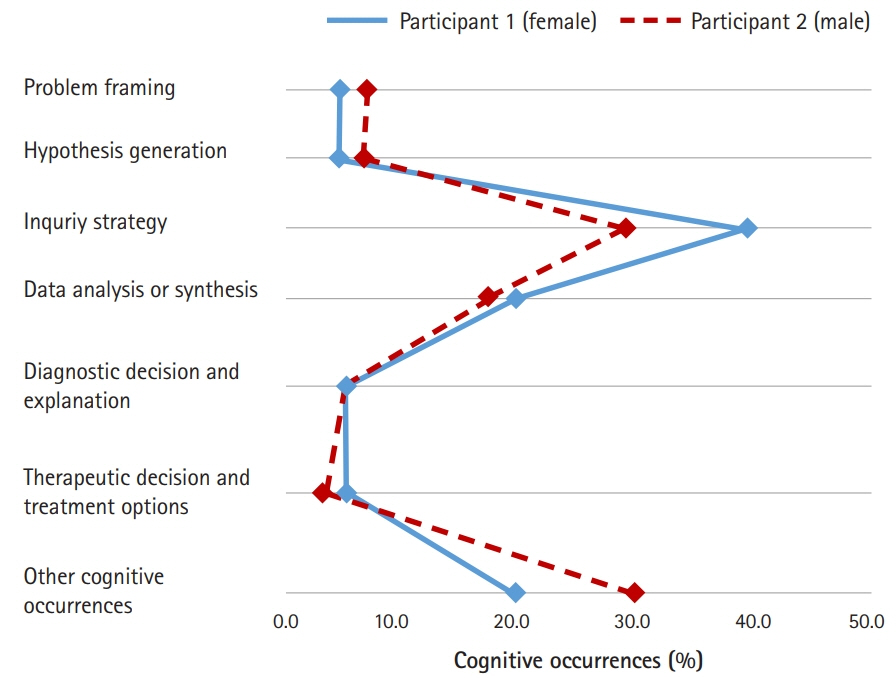

Both participants showed similar proportional frequencies of clinical reasoning patterns on the same clinical assessments. The results also suggested that the 3 assessment types may stimulate different patterns of clinical reasoning. For example, the CPX strongly promoted the participants’ reasoning related to inquiry strategy, whereas the MEQ strongly promoted hypothesis generation. In addition, the CBA stimulated the participants’ data analysis and synthesis more strongly than the other assessment types did.

Conclusion

This study found that different assessment designs stimulated different patterns of thinking during problem-solving. This finding can inform efforts to improve current clinical assessments. Importantly, the research method used in this study can serve as an alternative way to examine the validity of clinical assessments.


Cited by 1 article

- Newly appointed medical faculty members’ self-evaluation of their educational roles at the Catholic University of Korea College of Medicine in 2020 and 2021: a cross-sectional survey-based study
  Sun Kim, A Ra Cho, Chul Woon Chung, Sun Huh
  J Educ Eval Health Prof. 2021;18:28. doi: 10.3352/jeehp.2021.18.28.


Similar articles

- A Trial of Surgical Clerkship for Developing Clinical Competency

- A Web-Based Performance Assessment Model for OSCE (Objective Structured Clinical Examination)

- Educational implications of assessing learning outcomes with multiple choice questions and short essay questions

- Effect of Simulation-based Practice by applying Problem based Learning on Problem Solving Process, Self-confidence in Clinical Performance and Nursing Competence

- Comparison of Self-assessment and Objective Structured Clinical Examination (OSCE) of Medical Students' Clinical Performance