J Educ Eval Health Prof.

2015;12:35. 10.3352/jeehp.2015.12.35.

Proposal of a linear rather than hierarchical evaluation of educational initiatives: the 7Is framework

- Affiliations


- 1Sapphire Group, Department of Health Sciences, University of Leicester, Leicester, United Kingdom. dr98@le.ac.uk

- 2Paediatric Emergency Medicine Leicester Academic Group, University Hospitals of Leicester NHS Trust, Leicester, United Kingdom.

- KMID: 2402042

- DOI: http://doi.org/10.3352/jeehp.2015.12.35

Abstract

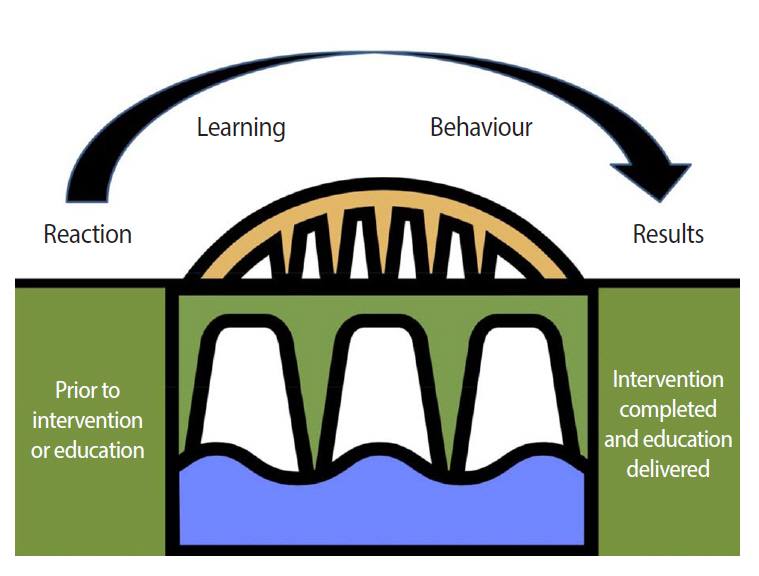

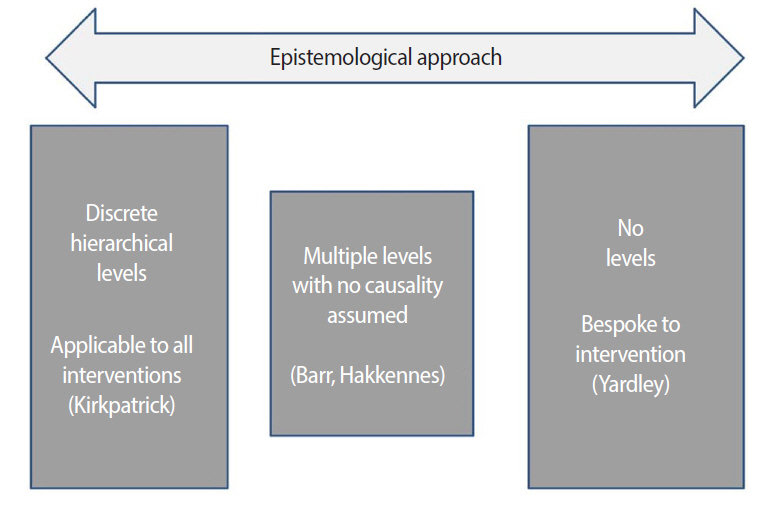

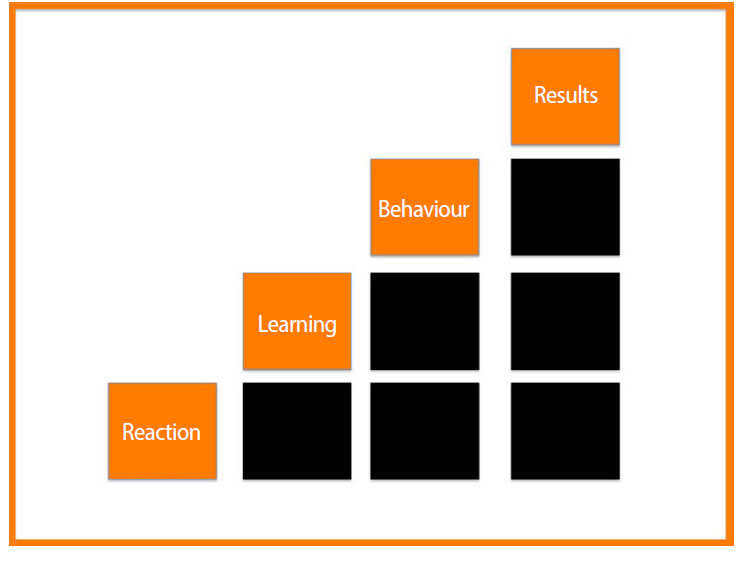

Extensive resources are expended attempting to change clinical practice; however, determining the effects of these interventions can be challenging. Traditionally, frameworks to examine the impact of educational interventions have been hierarchical in their approach. In this article, existing frameworks to examine medical education initiatives are reviewed and a novel '7Is framework' is discussed. This framework contains seven linearly sequenced domains: interaction, interface, instruction, ideation, integration, implementation, and improvement. The 7Is framework enables the conceptualization of the various effects of an intervention, promoting the development of a set of valid and specific outcome measures and ultimately leading to more robust evaluation.

Figure

Cited by 1 article

Changes in academic performance in the online, integrated system-based curriculum implemented due to the COVID-19 pandemic in a medical school in Korea

Do-Hwan Kim, Hyo Jeong Lee, Yanyan Lin, Ye Ji Kang, Sun Huh

J Educ Eval Health Prof. 2021;18:24. doi: 10.3352/jeehp.2021.18.24.


